AI vocals are transforming how producers work, create, and collaborate. They’ve opened doors that once required studio sessions, travel budgets, and tight schedules. But with all that power, many producers are still falling into the same traps.

The problem isn’t AI. It’s how people use it. The difference between a synthetic voice and a real AI voice built from a professional singer is massive. One replaces the human touch. The other amplifies it. Understanding that distinction is what separates the hobbyists from the professionals.

Here are seven mistakes producers keep making, and how to fix each one if you care about the quality and integrity of your sound.

1. Treating AI as a replacement, not a collaborator

AI was never meant to replace singers. It was meant to help them reach further. The best producers know how to collaborate with technology rather than hide behind it.

When you use synthetic voices, you get an imitation of emotion, a surface-level copy of what a human can express. But when you work with AI models created from real singers, like those in Auribus, you get authenticity and control at once. You’re not erasing the artist; you’re extending their reach.

The magic happens when the technology feels invisible and the music still feels alive.

2. Ignoring consent and ethics

One of the biggest mistakes in modern music production is pretending that consent doesn’t matter. Some AI tools build their libraries by scraping voices from artists who never agreed to it. That’s not innovation; it’s digital theft.

At Auribus, every model is created in collaboration with real vocalists who give consent and share in the success of the platform. When a producer uses one of our voices, they know the artist is being compensated fairly. That’s how AI should work: empowering creativity while respecting the people who make it possible.

3. Underestimating the hidden cost of bad vocal takes

Every producer knows this feeling. The singer is in the booth, the mix is ready, and suddenly the takes start piling up. Something doesn’t feel right. Maybe it’s the timing, the tone, or the energy. You tell yourself you’ll fix it later. But later always costs more than you think.

Bad vocals aren’t just frustrating; they’re expensive. They slow your process, kill momentum, and drain your creativity. The smart move isn’t endless retakes or patchwork edits. It’s using AI voices ethically sourced from real singers who already sound professional. You get the warmth, the presence, and the emotion without the chaos.

That’s not cheating. That’s efficient collaboration.

4. Overprocessing everything

A common mistake producers make with AI vocals is trying too hard to make them sound “perfect.” Heavy tuning, EQ, and compression flatten human texture into a plastic sound.

AI doesn’t need to sound flawless. It needs to sound believable. The subtle imperfections in a voice, such as a breath, a crack, or a texture, are what connect listeners emotionally. Auribus models are designed to keep that authenticity intact because they come from real singers, not computer simulations.

5. Forgetting that context matters

A good producer understands that every project has its own sonic DNA. The same vocal model won’t fit both a cinematic score and an electronic track. Treating AI vocals as one-size-fits-all leads to sterile, generic productions.

The strength of AI is flexibility. The strength of Auribus is realism. Choosing the right voice model for each creative context is how you keep your music sounding intentional instead of automated.

6. Neglecting workflow integration

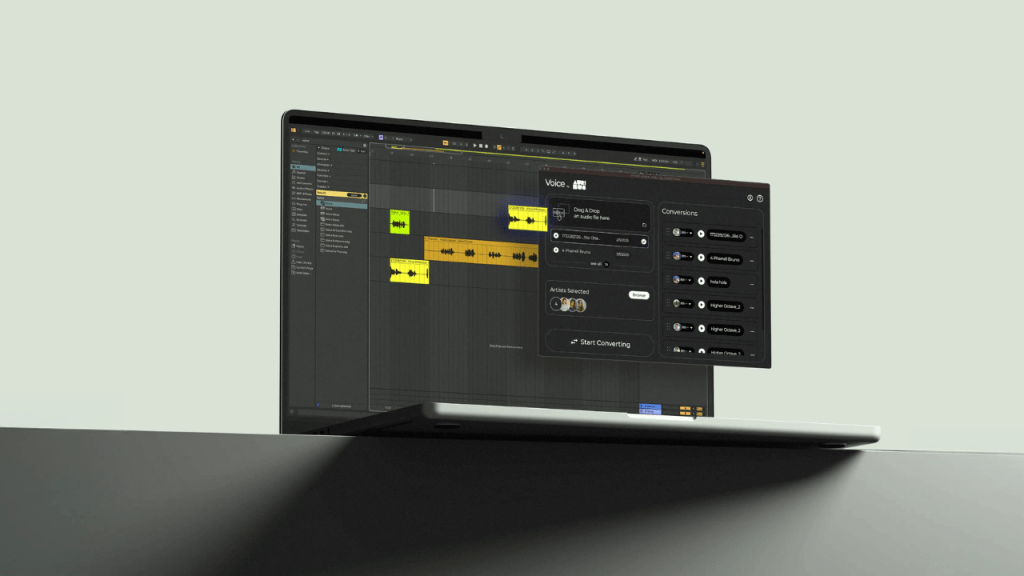

Your ideas move fast. Your tools should keep up. Many producers lose time using systems that don’t fit naturally into their workflow. Exporting files, switching apps, or reloading sessions breaks creativity.

AI vocals should feel native inside your DAW. With Auribus, that’s exactly how it works. You can experiment, render, and drop vocals directly in your mix without leaving your environment. It’s designed for professionals who care about quality and efficiency at the same time.

7. Ignoring the story behind the voice

Every AI voice has a story. Or at least, it should. Synthetic systems that mimic tone without credit erase the human behind the sound. But real AI voices, the kind built from partnerships with artists, carry identity and history.

When you use Auribus, you’re not sampling a faceless algorithm. You’re collaborating with a real vocalist, someone whose craft, time, and skill are respected and rewarded. That’s how we keep art alive while pushing technology forward.

The takeaway

AI is not the enemy of music. Misuse is. The future of music production doesn’t lie in synthetic shortcuts but in tools that combine human artistry with smart technology.

When you choose ethically built AI vocals that come from real singers, you’re not just improving your workflow. You’re helping create a sustainable ecosystem where artists stay active, valued, and fairly compensated.

Music evolves. Integrity should too.

👉 Discover how Auribus turns AI into collaboration, not imitation.