The secret no one talks about

Here is a question that makes producers uncomfortable.

If the vocals on your track were created with AI, and they sound completely real, do you have to tell anyone?

The technology is now good enough that most listeners would never know. They would just hear emotion, dynamics, and clarity. They would hear a voice that sounds human because, in many ways, it is. It is built from the data and performance of a real singer.

But this is where the debate begins.

Does authenticity depend on the truth behind the sound, or only on how it makes people feel?

The ethics behind the mix

For years, music has been about illusion.

Auto-Tune, ghostwriting, and post-production have shaped how we experience sound. But AI vocals take that illusion one step further. They do not just enhance a performance. They can be the performance.

So what happens when the line between artist and algorithm disappears?

When a producer uses a synthetic voice built from a real singer's voice without their consent, that is not innovation. It is imitation. But when the voice model was created ethically, with consent and fair payment, it becomes collaboration.

The difference is not in how real it sounds. It is in whether the people behind the sound were respected.

The illusion of “real”

Listeners fall in love with voices, not code. They connect to the tiny imperfections, the breath before a note, the strain in a high phrase. Those human details are what make a song believable.

If AI vocals capture that essence, is it dishonest to let the audience think it is a person singing in real time? Or is it simply the evolution of art, the next phase in how we express emotion through sound?

Maybe the question is not whether you should tell the listener, but whether you have earned the right to tell them. If the voice you used was built from a real artist who gave consent and is paid, then what you created is honest work. You are sharing art built on respect, not manipulation.

What transparency really means

Transparency does not always mean explaining every piece of technology. No one labels a song saying which microphone was used or which compressor shaped the tone. But when people are involved, when someone’s identity, voice, and craft are part of your song, they deserve recognition.

The listener may not need to know the technical side, but they deserve to experience music that was made ethically. That is real transparency. It is not about confessing that you used AI. It is about making sure that the artists behind it were treated as artists, not as data.

The Auribus perspective

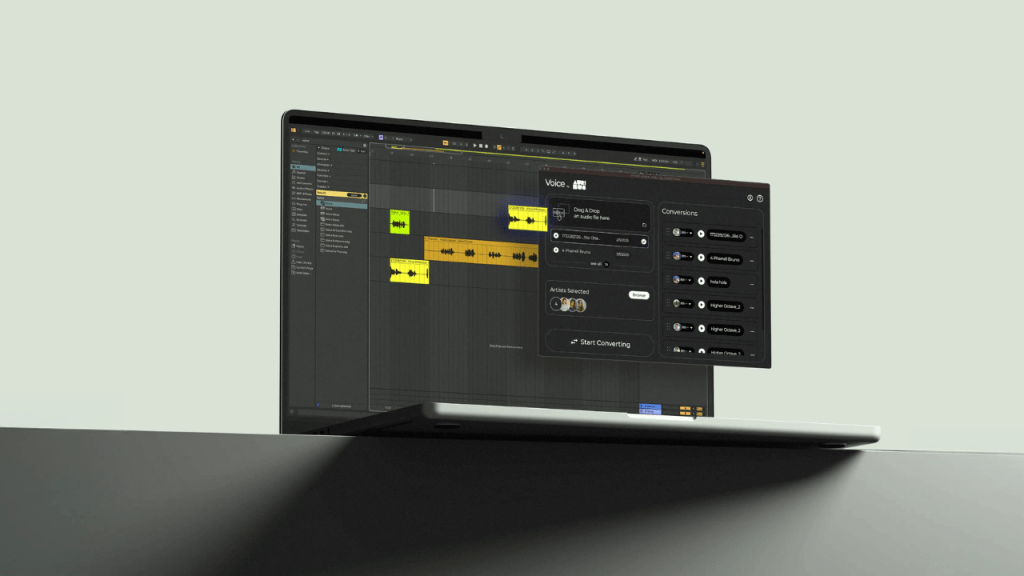

At Auribus, every AI voice comes from a real singer who chose to be part of this future. Every vocal model is based on consent, transparency, and fair pay. That means you are not hiding anything when you use these voices. You are honoring the human stories behind them.

Producers using Auribus are not pretending. They are collaborating. The result sounds human because it is human. It is the voice of technology working with people, not against them.

So should you tell the listener? That depends.

If your track was made with honesty, then the truth is already in the sound.

The takeaway

Music has always evolved through tools that change how we create. AI is not the end of authenticity. It is the next chapter of it. The only thing that separates art from imitation is integrity.

If your vocals sound real, make sure they are real in spirit: built on respect, collaboration, and fairness. That is the kind of truth the listener deserves to hear, even if you never have to say a word.

Auribus: Real Voices. Real Artists. Real Ethics.